AI tools for Hugging Face model integration with Chat MLX

Related Tools:

MindsDB

MindsDB is an AI development cloud platform that enables developers to customize AI for their specific needs and purposes. It provides a range of features and tools for building, deploying, and managing AI models, including integrations with various data sources, AI engines, and applications. MindsDB aims to make AI more accessible and useful for businesses and organizations by allowing them to tailor AI solutions to their unique requirements.

vLLM

vLLM is a fast and easy-to-use library for LLM inference and serving. It offers state-of-the-art serving throughput, efficient management of attention key and value memory, continuous batching of incoming requests, fast model execution with CUDA/HIP graphs, and various decoding algorithms. It integrates with popular Hugging Face models and supports tensor parallelism, streaming outputs, prefix caching, and multi-LoRA on both NVIDIA and AMD GPUs. vLLM is designed to provide fast and efficient LLM serving for everyone.
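To make "continuous batching of incoming requests" concrete, here is a toy simulation in plain Python. It is only a sketch of the scheduling idea and not vLLM's scheduler: the function names and the assumption that every request produces one token per decode step are invented for illustration.

```python
from collections import deque


def continuous_batching_steps(lengths, max_batch):
    """Count decode steps when finished requests are replaced immediately."""
    waiting = deque(lengths)   # tokens still to generate, per queued request
    running = []               # remaining tokens for requests in the batch
    steps = 0
    while waiting or running:
        # Fill any free batch slots from the queue before every step.
        while waiting and len(running) < max_batch:
            running.append(waiting.popleft())
        # One decode step yields one token for every running request;
        # requests that reach zero remaining tokens leave the batch.
        running = [r - 1 for r in running if r - 1 > 0]
        steps += 1
    return steps


def static_batching_steps(lengths, max_batch):
    """Count decode steps when each batch runs until its longest request ends."""
    steps = 0
    for i in range(0, len(lengths), max_batch):
        steps += max(lengths[i:i + max_batch])
    return steps


lengths = [8, 2, 2, 2]  # hypothetical output lengths, in tokens
print(continuous_batching_steps(lengths, max_batch=2))  # 8
print(static_batching_steps(lengths, max_batch=2))      # 10
```

In this made-up workload the short requests slot in next to the long one as soon as a place frees up, so the queue drains in fewer steps than when each batch waits for its slowest member.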

PromptChainer

PromptChainer is a powerful AI flow generation tool that allows users to create complex AI-driven flows with ease using a visual flow builder. It seamlessly integrates AI and traditional programming, enabling users to chain prompts and models and manage AI-generated insights on large-scale data effortlessly. With pre-built templates, a user-friendly database, and versatile logic nodes, PromptChainer empowers users to build custom flows or applications with infinite possibilities.

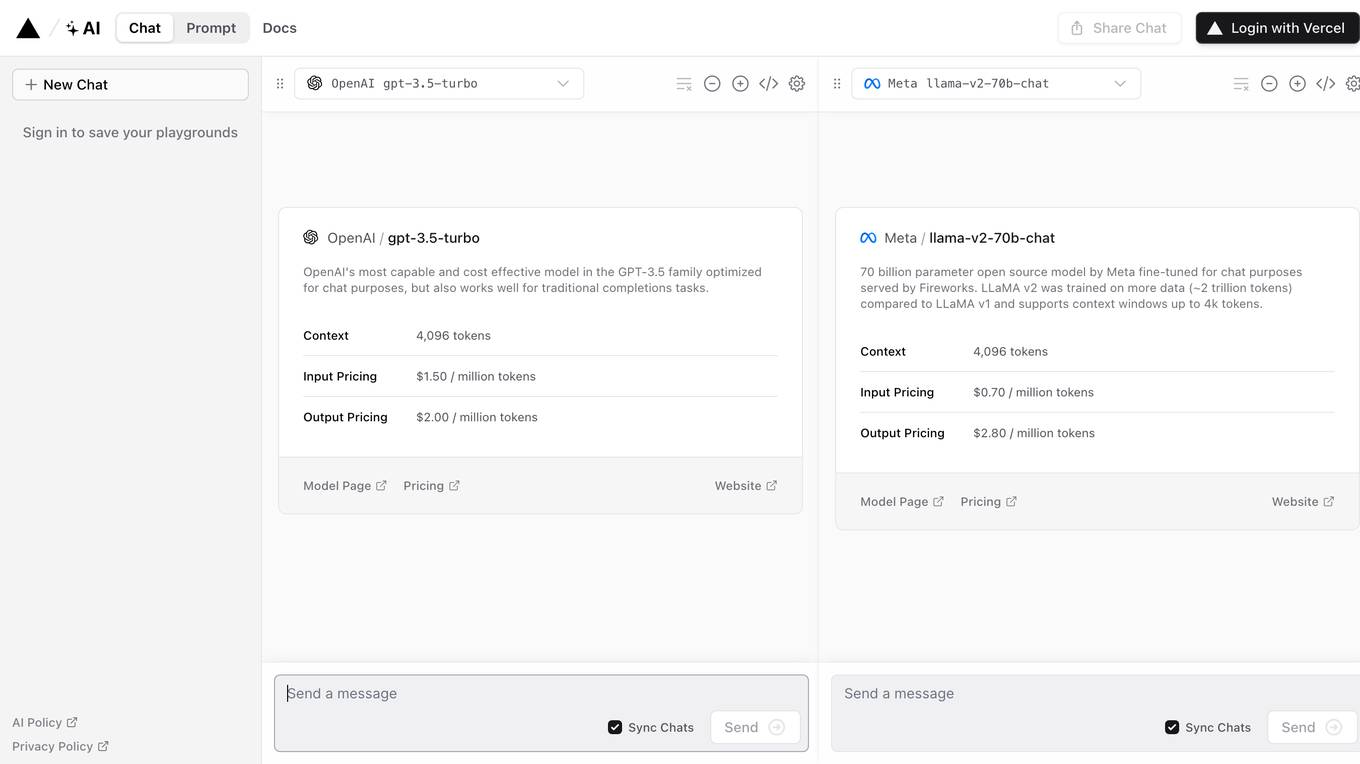

AI SDK

The AI SDK is a free open-source library designed to empower developers to build AI-powered products. Developed by the creators of Next.js, it offers a unified Provider API that allows users to easily switch between AI providers by changing a single line of code. With features like generative UI, framework-agnostic compatibility, and streaming AI responses, the AI SDK simplifies the process of integrating AI capabilities into applications. Trusted by prominent builders like OpenAI and Hugging Face, the AI SDK has received praise for its ease of use, speed of development, and comprehensive documentation.

FutureSmart AI

FutureSmart AI is a platform that provides custom Natural Language Processing (NLP) solutions. The platform focuses on integrating Mem0 with LangChain to enhance AI Assistants with Intelligent Memory. It offers tutorials, guides, and practical tips for building applications with large language models (LLMs) to create sophisticated and interactive systems. FutureSmart AI also features internship journeys and practical guides for mastering RAG with LangChain, catering to developers and enthusiasts in the realm of NLP and AI.

Infermatic.ai

Infermatic.ai is a platform that provides access to top Large Language Models (LLMs) with a user-friendly interface. It offers complete privacy, robust security, and scalability for projects, research, and integrations. Users can test, choose, and scale LLMs according to their content needs or business strategies. The platform eliminates the complexities of infrastructure management, latency issues, version control problems, integration complexities, scalability concerns, and cost management issues. Infermatic.ai is designed to be secure, intuitive, and efficient for users who want to leverage LLMs for various tasks.

Derwen

Derwen is an open-source integration platform for production machine learning in enterprise, specializing in natural language processing, graph technologies, and decision support. It offers expertise in developing knowledge graph applications and domain-specific authoring. Derwen collaborates closely with Hugging Face and provides strong data privacy guarantees, low carbon footprint, and no cloud vendor involvement. The platform aims to empower AI engineers and domain experts with quality, time-to-value, and ownership since 2017.

Promptly

Promptly is a generative AI platform designed for enterprises to build custom AI agents, applications, and chatbots without any coding experience. The platform allows users to seamlessly integrate their own data and GPT-powered models, supporting a wide variety of data sources. With features like model chaining, developer-friendly tools, and collaborative app building, Promptly empowers teams to quickly prototype and scale AI applications for various use cases. The platform also offers seamless integrations with popular workflows and tools, ensuring limitless possibilities for AI-powered solutions.

Janus Pro

Janus Pro is a free online AI image generator that leverages advanced multimodal processing to analyze and create high-quality images. It reportedly outperforms models like DALL-E 3 and Stable Diffusion in detail and accuracy. Built on the DeepSeek-LLM architecture with 7 billion parameters, Janus Pro features separate encoding pathways for enhanced flexibility. The application is freely available on Hugging Face and was trained on millions of samples for multimodal understanding and visual generation.

Ainsider.store

Ainsider.store is an AI tool that offers AI Agents & Automation templates for various business needs. It provides workflows, guides, and resources in categories such as Prompt Engineering, Productivity, Personal Agents, Marketing, Knowledge Base, Ecommerce, and Content. Users can access a complete library of AI Automations and Agents by subscribing for $19.99 per month. The platform utilizes popular frameworks like Voiceflow, Botpress, n8n, and Hugging Face to build and customize agents for diverse scenarios.

Hugging Face

Hugging Face is an AI community platform that serves as a collaboration hub for the machine learning community. It allows users to explore and contribute to models, datasets, and applications. The platform offers a wide range of features and tools to facilitate AI development and research.

AIModels.fyi

AIModels.fyi is a website that helps users find the best AI model for their startup. The website provides a weekly rundown of the latest AI models and research, and also allows users to search for models by category or keyword. AIModels.fyi is a valuable resource for anyone looking to use AI to solve a problem.

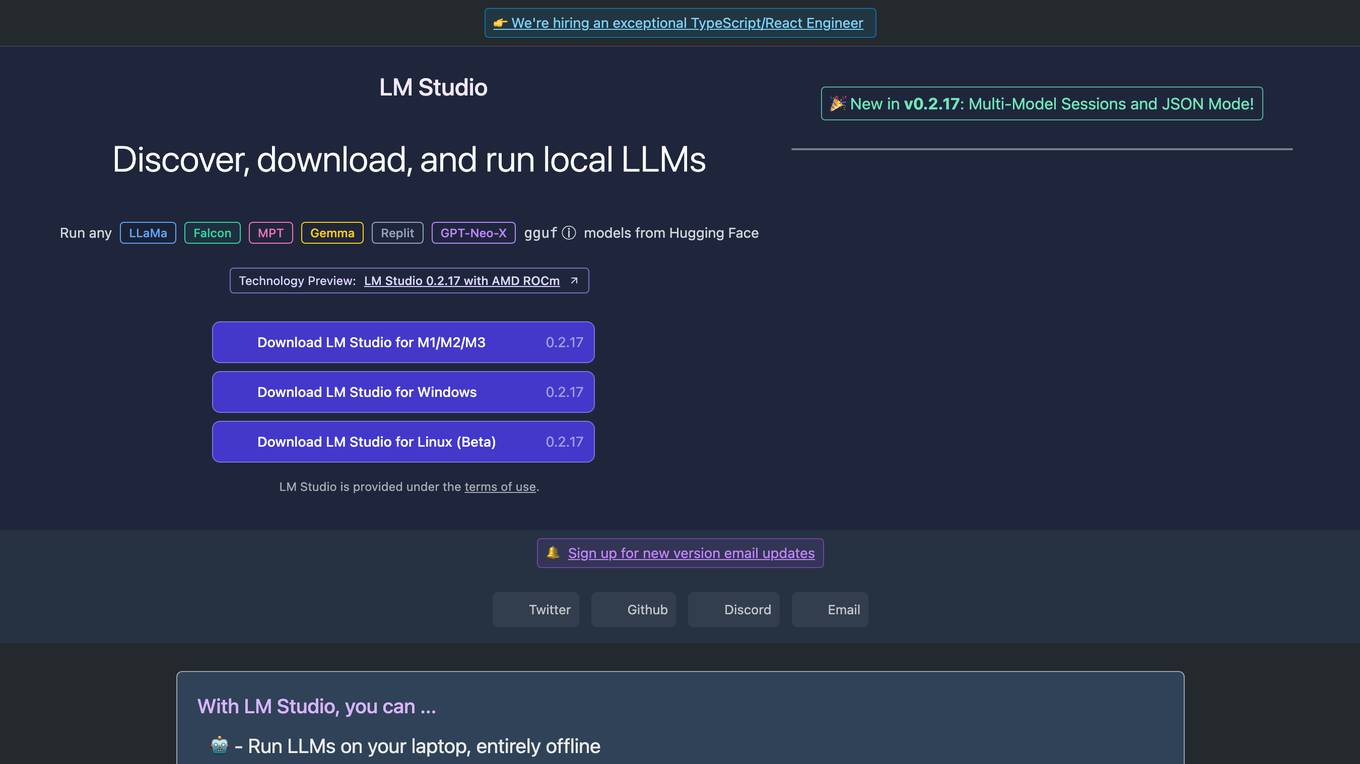

LM Studio

LM Studio is an AI tool designed for discovering, downloading, and running local LLMs (Large Language Models). Users can run LLMs on their laptops offline, use models through an in-app Chat UI or a local server, download compatible model files from HuggingFace repositories, and discover new LLMs. The tool ensures privacy by not collecting data or monitoring user actions, making it suitable for personal and business use. LM Studio supports various models like ggml Llama, MPT, and StarCoder on Hugging Face, with minimum hardware/software requirements specified for different platforms.

Mo Ai Jobs

Mo Ai Jobs is a job board for artificial intelligence (AI) professionals. It lists jobs in machine learning, engineering, research, data science, and other AI-related fields. The site is designed to help AI professionals find jobs at next-generation AI companies. Mo Ai Jobs is a valuable resource for anyone looking for a job in the AI industry.

Stable Diffusion XL

Stable Diffusion XL (SDXL) is the latest AI image generation model that can generate realistic faces, legible text within the images, and better image composition, all while using shorter and simpler prompts. It is an improved version of the previous Stable Diffusion models, with better photorealistic outputs, more detailed imagery, and improved face generation. SDXL is available via DreamStudio and other image generation apps like NightCafe Studio and ClipDrop. It can be used for a variety of tasks, including image generation, image-to-image prompting, inpainting, and outpainting.

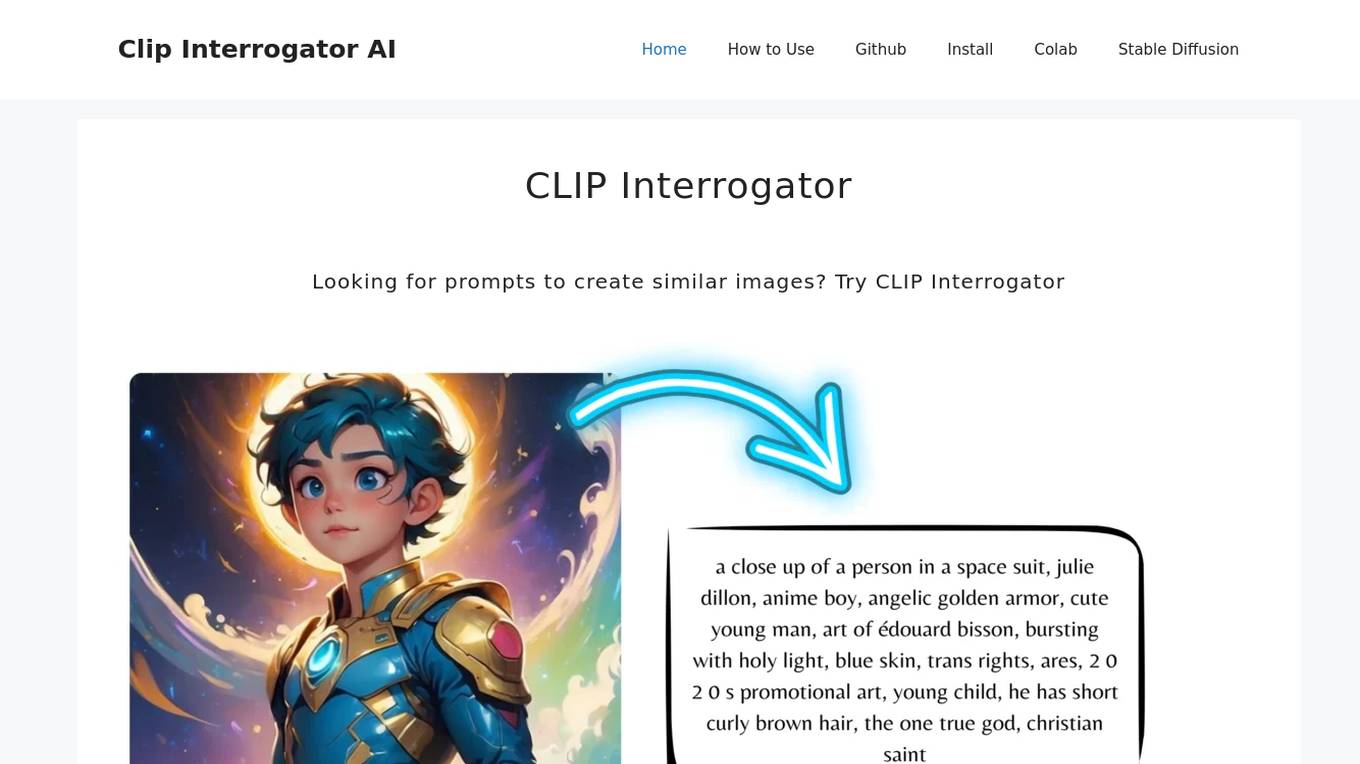

CLIP Interrogator

CLIP Interrogator is a tool that uses the CLIP (Contrastive Language–Image Pre-training) model to analyze images and generate descriptive text or tags. It effectively bridges the gap between visual content and language by interpreting the contents of images through natural language descriptions. The tool is particularly useful for understanding or replicating the style and content of existing images, as it helps in identifying key elements and suggesting prompts for creating similar imagery.

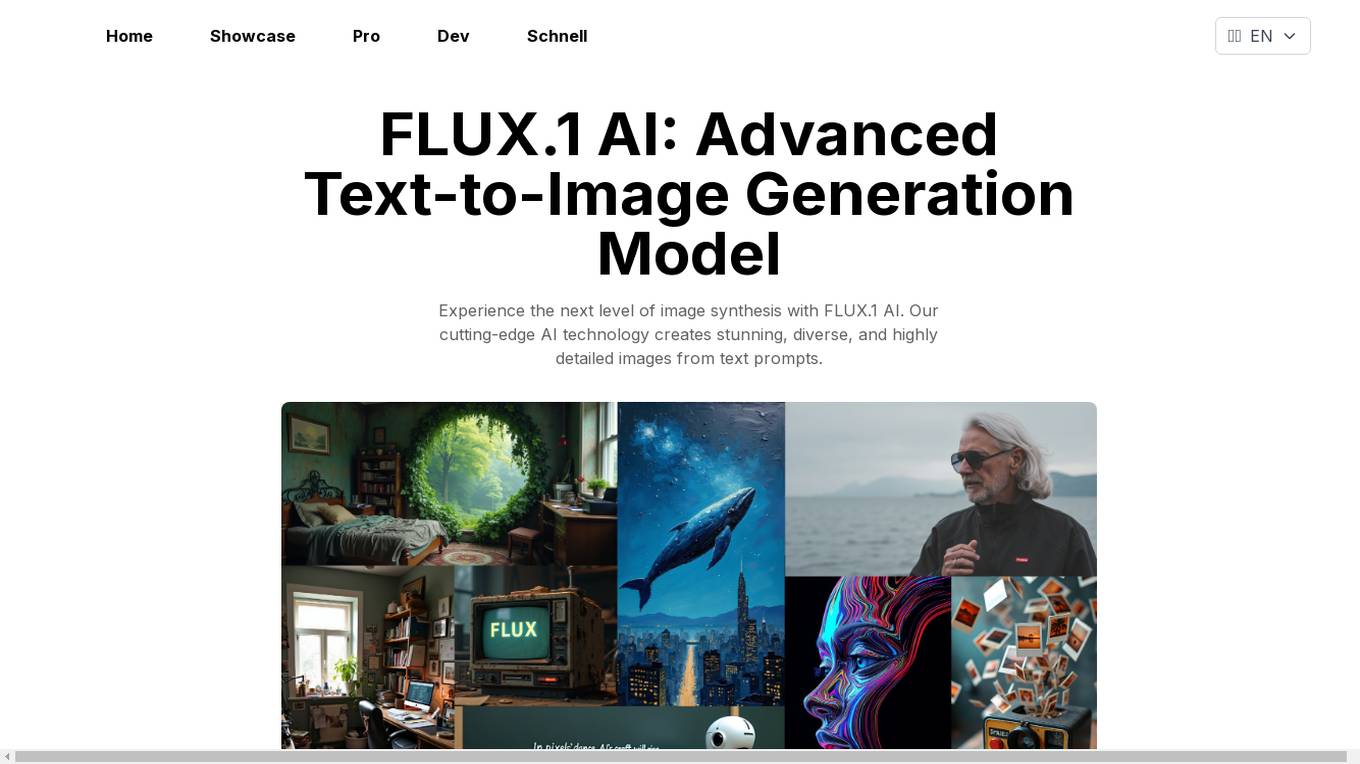

FLUX.1 AI

FLUX.1 AI is an advanced text-to-image generation model developed by Black Forest Labs. It utilizes cutting-edge AI technology to create stunning, diverse, and highly detailed images from text prompts. The application offers exceptional image quality, prompt adherence, style diversity, and scene complexity, setting new standards in text-to-image synthesis. FLUX.1 AI supports various aspect ratios and resolutions, providing flexibility in image creation. It is available in three versions: FLUX.1 [pro], FLUX.1 [dev], and FLUX.1 [schnell], each catering to different needs and access levels.

HuggingFace Helper

A witty yet succinct guide to Hugging Face that offers technical assistance on using the platform, based on its Learning Hub.

REPO MASTER

Expert at fetching repository information from GitHub, Hugging Face, and your local repositories.

mlx-lm

MLX LM is a Python package designed for generating text and fine-tuning large language models on Apple silicon using MLX. It offers integration with the Hugging Face Hub for easy access to thousands of LLMs, support for quantizing and uploading models to the Hub, low-rank and full model fine-tuning capabilities, and distributed inference and fine-tuning with `mx.distributed`. Users can interact with the package through command line options or the Python API, enabling tasks such as text generation, chatting with language models, model conversion, streaming generation, and sampling. MLX LM supports various Hugging Face models and provides tools for efficient scaling to long prompts and generations, including a rotating key-value cache and prompt caching. It requires macOS 15.0 or higher for optimal performance.
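The idea behind a rotating key-value cache can be sketched in a few lines of plain Python. This is a toy model under stated assumptions, not MLX LM's implementation: the class name and the `max_size` and `keep` parameters are chosen here for illustration, and a real cache would hold key and value tensors rather than integers.

```python
class RotatingCache:
    """Toy fixed-size cache: keep the first `keep` entries and the newest ones."""

    def __init__(self, max_size, keep=0):
        if keep >= max_size:
            raise ValueError("keep must be smaller than max_size")
        self.max_size = max_size
        self.keep = keep
        self.entries = []

    def append(self, entry):
        if len(self.entries) == self.max_size:
            # Evict the oldest entry that is not in the protected prefix.
            del self.entries[self.keep]
        self.entries.append(entry)


cache = RotatingCache(max_size=4, keep=1)
for token_position in range(7):
    cache.append(token_position)
print(cache.entries)  # [0, 4, 5, 6]
```

Because the cache never grows past `max_size`, memory stays bounded however long the generation runs, at the cost of forgetting the middle of the context.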

ollama

Ollama is a lightweight, extensible framework for building and running language models on the local machine. It provides a simple API for creating, running, and managing models, as well as a library of pre-built models that can be easily used in a variety of applications. Ollama is designed to be easy to use and accessible to developers of all levels. It is open source and available for free on GitHub.

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

GPTQModel

GPTQModel is an easy-to-use LLM quantization and inference toolkit based on the GPTQ algorithm. It provides support for weight-only quantization and offers features such as dynamic per layer/module flexible quantization, sharding support, and auto-heal quantization errors. The toolkit aims to ensure inference compatibility with HF Transformers, vLLM, and SGLang. It offers various model supports, faster quant inference, better quality quants, and security features like hash check of model weights. GPTQModel also focuses on faster quantization, improved quant quality as measured by PPL, and backports bug fixes from AutoGPTQ.
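As a rough illustration of what weight-only quantization means, the sketch below applies plain symmetric round-to-nearest quantization to one small group of weights. This is deliberately not the GPTQ algorithm, which additionally corrects for the error each rounding introduces, and it does not use the GPTQModel API; the function names and sample weights are hypothetical.

```python
def quantize_group(weights, bits=4):
    """Symmetric round-to-nearest quantization of one group of weights."""
    qmax = 2 ** (bits - 1) - 1            # 7 for 4-bit signed integers
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Each weight becomes a small integer code, clamped to [-qmax, qmax].
    codes = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return codes, scale


def dequantize_group(codes, scale):
    """Map integer codes back to approximate floating-point weights."""
    return [c * scale for c in codes]


weights = [0.7, -0.3, 0.12, 0.0]          # hypothetical weight values
codes, scale = quantize_group(weights)
restored = dequantize_group(codes, scale)
print(codes)                              # [7, -3, 1, 0]
```

Only the weights are stored as low-bit integers plus one scale per group; activations stay in floating point, which is why the approach is called weight-only.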

AITreasureBox

AITreasureBox is a comprehensive collection of AI tools and resources designed to simplify and accelerate the development of AI projects. It provides a wide range of pre-trained models, datasets, and utilities that can be easily integrated into various AI applications. With AITreasureBox, developers can quickly prototype, test, and deploy AI solutions without having to build everything from scratch. Whether you are working on computer vision, natural language processing, or reinforcement learning projects, AITreasureBox has something to offer for everyone. The repository is regularly updated with new tools and resources to keep up with the latest advancements in the field of artificial intelligence.

chat-with-mlx

Chat with MLX is an all-in-one chat playground using Apple MLX on Apple Silicon Macs. It provides privacy-enhanced AI for secure conversations with various models, easy integration of Hugging Face and MLX-compatible open-source models, and default models such as Llama-3, Phi-3, Yi, Qwen, Mistral, Codestral, Mixtral, and StableLM. The tool is designed for developers and researchers working with machine learning models on Apple Silicon.

PhiCookBook

Phi Cookbook is a repository containing hands-on examples with Phi, a series of open-source AI models developed by Microsoft. The repository describes Phi as a powerful and cost-effective small language model family, with benchmarks covering scenarios such as multi-language, reasoning, text/chat generation, coding, images, and audio. Users can deploy Phi to the cloud or to edge devices to build generative AI applications with limited computing power.

achatbot

achatbot is a factory tool that allows users to create chatbots with various functionalities such as LLM (large language models), ASR (automatic speech recognition), TTS (text-to-speech), VAD (voice activity detection), OCR (optical character recognition), and object detection. The tool provides a structured project with features like chatbots for cmd, gRPC, and HTTP servers. It supports various chatbot processors, transport connectors, and AI modules for different tasks. Users can run chatbots locally or deploy them on services such as Vercel, Cloudflare, and AWS Lambda, or with Docker. The tool also includes UI components for easy deployment and service architecture diagrams for reference.

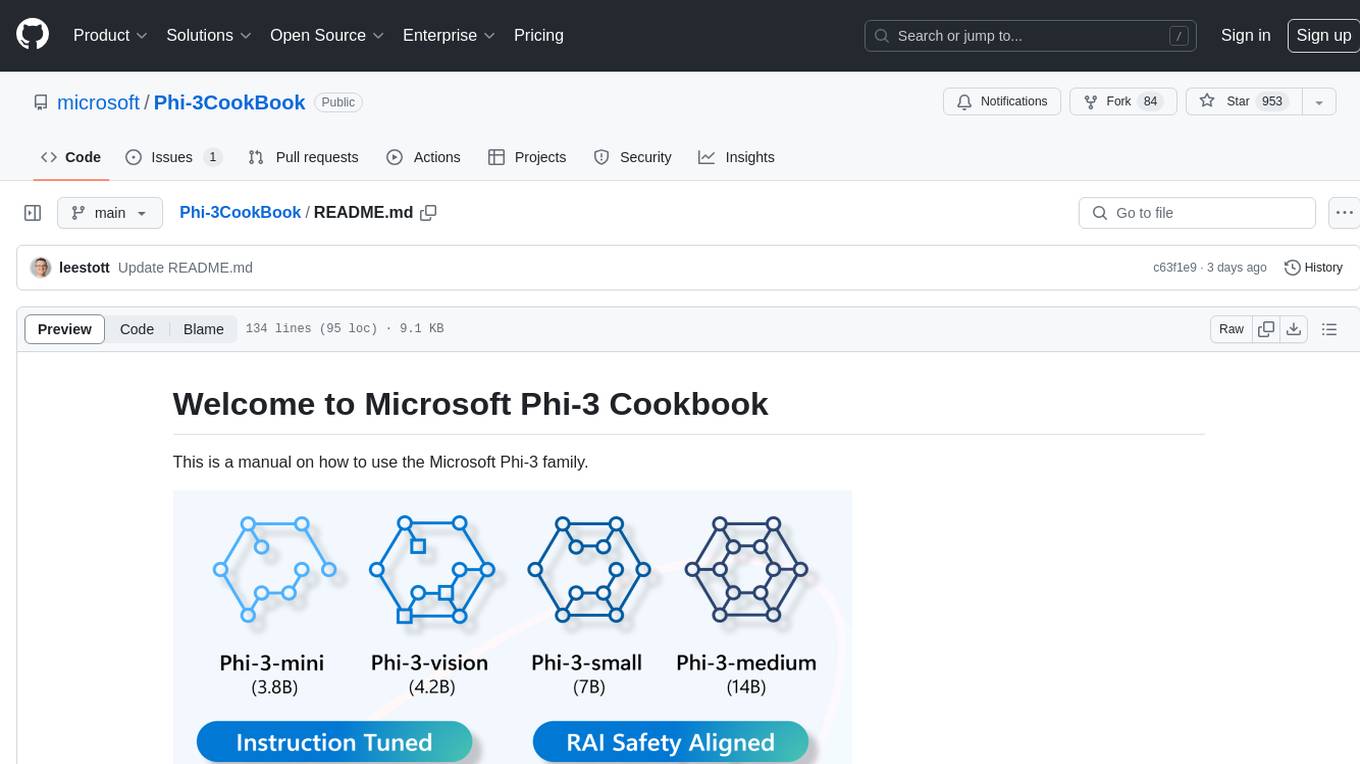

Phi-3CookBook

Phi-3CookBook is a manual on how to use the Microsoft Phi-3 family, which consists of open AI models developed by Microsoft. The Phi-3 models are highly capable and cost-effective small language models, outperforming models of similar and larger sizes across various language, reasoning, coding, and math benchmarks. The repository provides detailed information on different Phi-3 models, their performance, availability, and usage scenarios across different platforms like Azure AI Studio, Hugging Face, and Ollama. It also covers topics such as fine-tuning, evaluation, and end-to-end samples for Phi-3-mini and Phi-3-vision models, along with labs, workshops, and contributing guidelines.

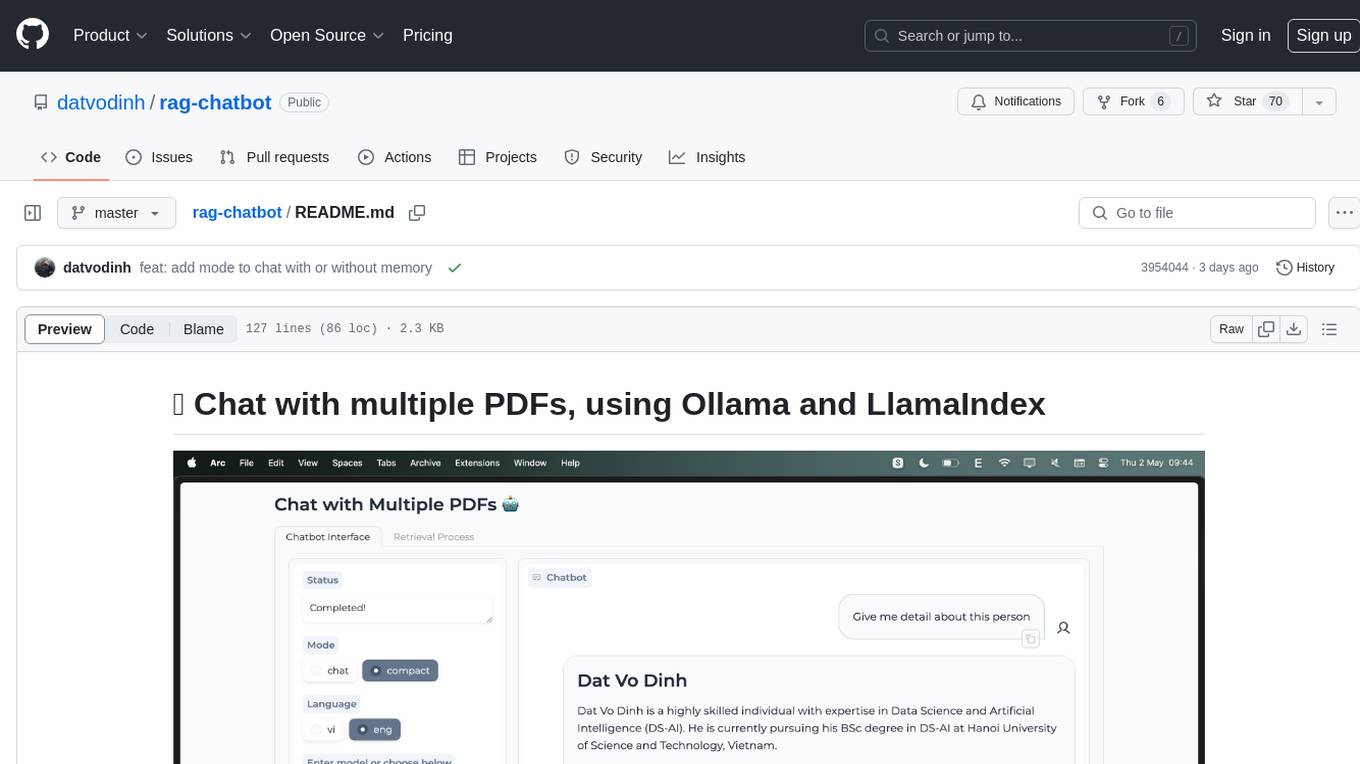

rag-chatbot

rag-chatbot is a tool that allows users to chat with multiple PDFs using Ollama and LlamaIndex. It provides an easy setup for running on local machines or Kaggle notebooks. Users can leverage models from Hugging Face and Ollama, process multiple PDF inputs, and chat in multiple languages. The tool offers a simple Gradio UI that supports chat with history and QA modes. Setup instructions are provided for both Kaggle and local environments, including installation steps for Docker, Ollama, Ngrok, and the rag_chatbot package. Users can run the tool locally and access it via a web interface. Planned enhancements include evaluation, better embedding models, knowledge graph support, improved document processing, MLX model integration, and Corrective RAG.

LEANN

LEANN is an innovative vector database that democratizes personal AI, transforming your laptop into a powerful RAG system that can index and search through millions of documents using 97% less storage than traditional solutions without accuracy loss. It achieves this through graph-based selective recomputation and high-degree preserving pruning, computing embeddings on-demand instead of storing them all. LEANN allows semantic search of file system, emails, browser history, chat history, codebase, or external knowledge bases on your laptop with zero cloud costs and complete privacy. It is a drop-in semantic search MCP service fully compatible with Claude Code, enabling intelligent retrieval without changing your workflow.

green-bit-llm

Green-Bit-LLM is a Python toolkit designed for fine-tuning, inference, and evaluation of GreenBitAI's low-bit large language models (LLMs). It utilizes the Bitorch Engine for efficient operations on low-bit LLMs, enabling high-performance inference on various GPUs and supporting full-parameter fine-tuning using quantized LLMs. The toolkit also provides evaluation tools to validate model performance on benchmark datasets. Green-Bit-LLM is compatible with the AutoGPTQ series of 4-bit quantization and compression models.

cactus

Cactus is an energy-efficient and fast AI inference framework designed for phones, wearables, and resource-constrained ARM-based devices. It takes a bottom-up approach with no dependencies, optimizing for budget and mid-range phones. The framework includes Cactus FFI for integration, Cactus Engine for high-level transformer inference, Cactus Graph for a unified computation graph, and Cactus Kernels for low-level ARM-specific operations. It is suitable for implementing custom models and scientific computing on mobile devices.

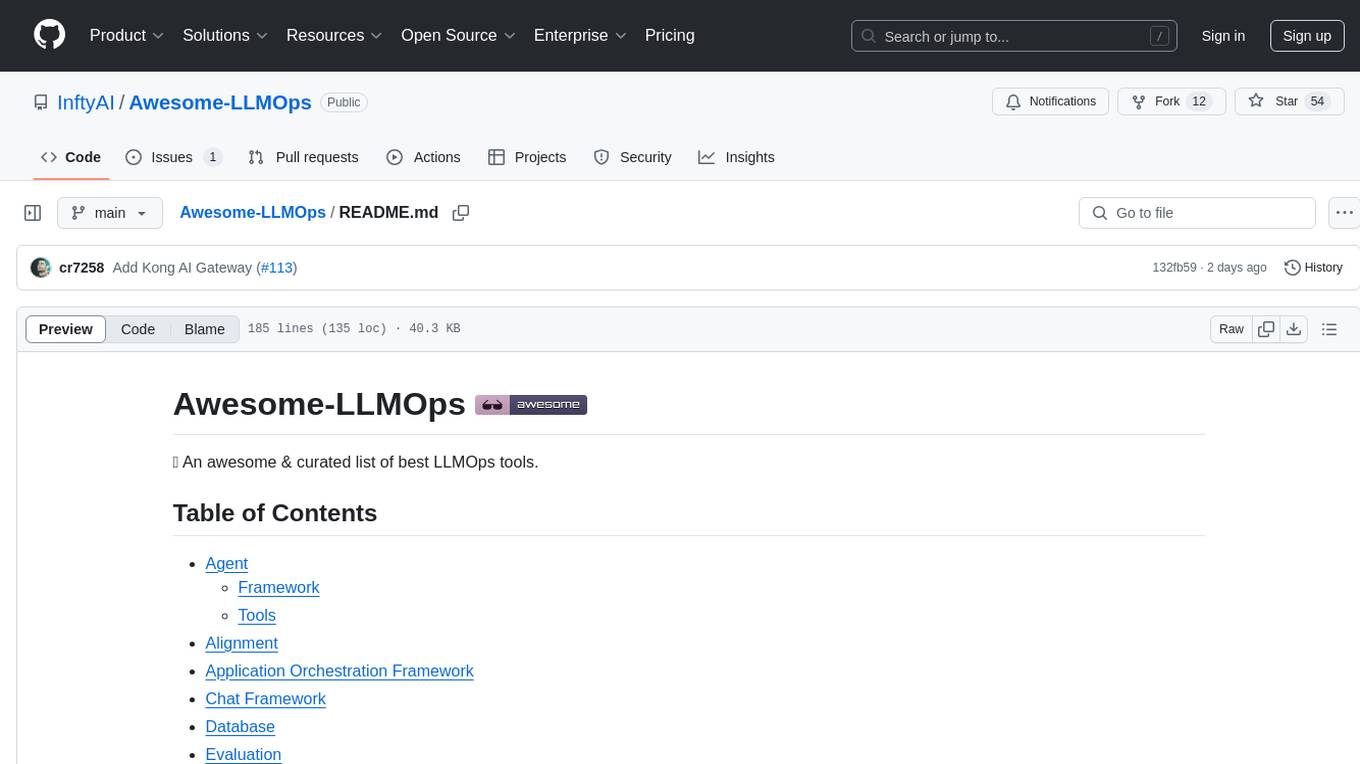

Awesome-LLMOps

Awesome-LLMOps is a curated list of the best LLMOps tools, providing a comprehensive collection of frameworks and tools for building, deploying, and managing large language models (LLMs) and AI agents. The repository includes a wide range of tools for tasks such as building multimodal AI agents, fine-tuning models, orchestrating applications, evaluating models, and serving models for inference. It covers various aspects of the machine learning operations (MLOps) lifecycle, from training to deployment and observability. The tools listed in this repository cater to the needs of developers, data scientists, and machine learning engineers working with large language models and AI applications.

LocalLLMClient

LocalLLMClient is a Swift package designed to interact with local Large Language Models (LLMs) on Apple platforms. It supports GGUF, MLX models, and the FoundationModels framework, providing streaming API, multimodal capabilities, and tool calling functionalities. Users can easily integrate this tool to work with various models for text generation and processing. The package also includes advanced features for low-level API control and multimodal image processing. LocalLLMClient is experimental and subject to API changes, offering support for iOS, macOS, and Linux platforms.

osaurus

Osaurus is a native, Apple Silicon-only local LLM server built on Apple's MLX for maximum performance on M‑series chips. It is a SwiftUI app + SwiftNIO server with OpenAI‑compatible and Ollama‑compatible endpoints. The tool supports native MLX text generation, model management, streaming and non‑streaming chat completions, OpenAI‑compatible function calling, real-time system resource monitoring, and path normalization for API compatibility. Osaurus is designed for macOS 15.5+ and Apple Silicon (M1 or newer) with Xcode 16.4+ required for building from source.
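Since the server exposes OpenAI-compatible endpoints, a client can in principle talk to it with an ordinary OpenAI-style HTTP request. The sketch below only builds such a request with the Python standard library and does not send it. The host, port, and model name are placeholders invented for this example, and the `/v1/chat/completions` path assumes the server follows the usual OpenAI convention.

```python
import json
import urllib.request

# Placeholder values: adjust to wherever the local server is listening.
BASE_URL = "http://127.0.0.1:8080"   # hypothetical host and port
MODEL_NAME = "local-model"           # hypothetical model identifier


def build_chat_request(prompt, base_url=BASE_URL, model=MODEL_NAME, stream=False):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": stream,
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_chat_request("Hello")
# To send it against a running server:
# with urllib.request.urlopen(request) as response:
#     reply = json.load(response)
#     print(reply["choices"][0]["message"]["content"])
```

Keeping request construction separate from sending makes it easy to point the same client at any other OpenAI-compatible local server by changing only the base URL and model name.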